Why Photoshop and Luminar's AI tools aren't quite ready to kill real photography

AI photo editing is here, but the machines won't take over just yet

For some photographers, the arrival of Photoshop 2021 and Luminar AI might feel a bit like the moment Skynet became self-aware in The Terminator. Thanks to new machine-learning features, the best photo editors are no longer just tools – they're increasingly becoming autonomous editors that stretch the definition of what a photo actually is.

I've been editing photographs for a long time. To put that in perspective, my idea of a good time is scrolling through screengrabs of old versions of Paint Shop Pro and fondly recalling the first time I cut-and-pasted my old French teacher's head onto the body of an elephant. Times change, and now I get paid to edit photos. I shoot them myself (the fun bit), and then handle the retouching, comping, post-production, you name it.

I've watched the likes of Photoshop grow steadily more impressive over the past couple of decades, but this is the year everything changes. Today, image editing, and particularly photo editing, is a peculiar mix of personal taste, an awareness of the outside world's tastes, and the demands of the client. But the photographer or retoucher is still the conduit through which everything flows.

Now, with the arrival of Photoshop 2021 a few months ago and the recent release of Luminar AI, picture editors everywhere – amateur and professional – might be wondering just how long their skills will remain an asset. It's the rise of machine-led photo editing – but as we'll discover, there'll still be a big role for us fleshy human retouchers for a while yet.

Space oddities

A great example of the debates these new 'AI' tools are sparking in photography circles appeared on Reddit the other day.

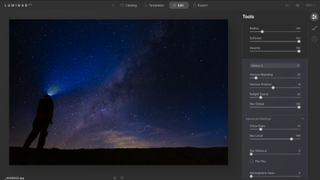

Over on the r/itookapicture subreddit, there was a heavily upvoted picture of the Milky Way over the Griffith Observatory in LA. The picture was a stunner: a beautifully symmetrical shot with the galactic core of the Milky Way just hidden behind the building, and the soft orange hum of the nocturnal city behind it.

But after the initial flood of upvotes, the grumbling began. The centre of the Milky Way wouldn't appear that far below the horizon. The exposure blending was suspicious. More than anything, given LA's historic levels of light pollution, how did the photographer get such a clear view of it? What was their secret?

The secret was AI, which you will have noticed is now everywhere. From those annoying chatbots when you're shopping online, to Instagram's mysterious algorithm deciding which posts you see first, artificial intelligence has become shorthand for "any time a computer makes a decision instead of you". And it was in full effect when our anonymous Redditor took a perfectly good night-time image and dropped in an egregious Milky Way background.

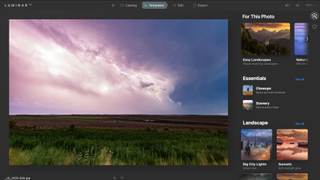

Of course, photo fakery has been around since the beginning of photography – but what’s interesting here is just how easy it is to make a genuinely photorealistic effect using the likes of Luminar AI, which produced the image in question.

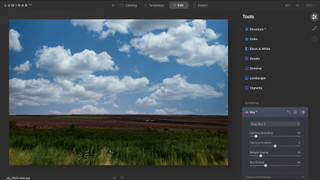

I reviewed Luminar AI a few weeks ago and, as ever, headed in with my arguably unhealthy brand of skepticism. Blown away barely begins to cover it – take an image with a boring sky, choose a more interesting one, and bosh: your picture is rejuvenated, enlivened, headed for the front page of Reddit or the top of the Instagram feed.

AI in the sky

Of course, photographers are uneasy about this. We're always uneasy about something – whether it's 'where did I put that lens cap?' or 'did I charge my battery?' – so it's only natural that micro-concerns give way to proper anxiety when it comes to the security of our jobs.

I’ve done quite a bit of what we’ll call sky photography. I’ve chased storms across the USA, I’ve shot the northern lights – and taught others how to do it – and I’ve photographed the Milky Way. Doing any of those things well is very difficult – you need to buy the right equipment, you need to do your homework (thanks, PhotoPills!), and you need to convince your client that you’re the right person for the job, and that they should pay you for it.

Why on Earth would anyone pay for a shot of the Milky Way by a professional photographer with a car's worth of equipment in their bag, when someone with a smartphone and Luminar AI can do pretty much the same thing? Just like Michael Jordan being overlooked for MVP in favor of Karl Malone in The Last Dance, it's hard not to take it personally.

And now everyone’s getting in on the act. Almost immediately after trying out Luminar AI for the first time, I headed over to Photoshop’s new AI features, which include – you guessed it – sky replacement. If anything, Photoshop’s sky replacement is even better than Luminar’s (the automatic horizon masking is just bonkers in its accuracy).

Things are even bleaker for portrait photographers. Remember all those years you spent figuring out just how to speak to nervous actors getting their first headshots, or convincing the father of the bride that he doesn’t have to do pistol-fingers in every picture?

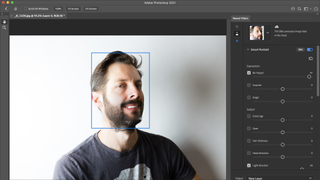

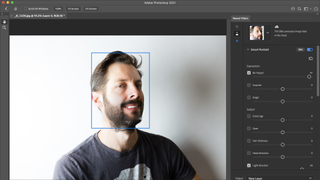

Forget it all, because both Luminar AI and Photoshop now let you make changes to your subjects that seem all but designed to enrage the body positivity crowd. Subject too fat? Make them thinner. Not smiley enough? Introduce a smile, or make them happier (or sadder) with a slider. There's even an age slider, allowing you to shave a few – or a lot – of years off your subject. Or, for those looking to inflict maximum psychological damage, you can add years as well.

You can write off all that time spent learning lighting as well. Three-point lighting? Reflectors? Bounce boards? Pish. Just drag the Light direction slider along and you’ll watch as Photoshop calculates where the light source was in your original picture, then rotates it around your subject in more or less real time. It’s not magic, obviously, but it also sort of… is?

So, forget everything you know. Going forward, photography will be the preserve of armchair warriors, taking average images in the field with no care for weather, lighting or composition, and then perfecting them on a computer afterwards. Photography is dead.

End of the code

At the same time, of course, it's not. Dropping a sky onto an image will never compare to the satisfaction of matching a weather report to a map and getting your position just right.

Ditto the ins and outs of good portrait photography. Photoshop can make a passable effort at understanding approximately what a human face should look like, but the person in your picture will definitely see that you've attacked them with a computer.

And don’t get me started on how Photoshop will add teeth to an image to make your portrait subject look smilier (above). Forget about editorial ethics, the effect is terrifying.

If you’re a real estate agent, being able to drop in a nicer-looking sky is a liberating time-saver, honesty be damned. For one thing, you’re an estate agent, and for another, who cares what the weather was like when a house was photographed?

It's the same for travel brochure designers, who can still use bespoke images of resorts or locations without having to hope their photographer happens to be there on a good-looking day.

For everything else, the computers aren’t going to have my job just yet – the effect is never quite as good as the real thing. Reddit will back me up on that.

Dave is a professional photographer whose work has appeared everywhere from National Geographic to the Guardian. Along the way he’s been commissioned to shoot zoo animals, luxury tech, the occasional car, countless headshots and the Northern Lights. As a videographer he’s filmed gorillas, talking heads, corporate events and the occasional penguin. He loves a good gadget but his favourite bit of kit (at the moment) is a Canon EOS T80 35mm film camera he picked up on eBay for £18.