This tiny UK startup chipmaker is targeting Intel and Nvidia with a monster AI chip

British-designed chip offers 250 TeraFLOPS of FP16.16 performance and packs more transistors than Nvidia's A100

Graphcore, a U.K.-based AI computing startup, has unveiled its new Colossus MK2 GC200 intelligence processing unit (IPU). The chip packs 59.4 billion transistors, a count that Graphcore says makes it the most complex processor in the world.

The IPU was designed specifically for machine intelligence tasks and can scale out to offer 1 PetaFLOPS of FP16.16 compute horsepower in a 1U machine or up to 16 ExaFLOPS in a datacenter.

The most complex

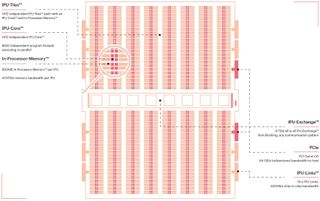

The Graphcore Colossus MK2 GC200 IPU packs 1,472 independent IPU cores with simultaneous multithreading (SMT), which together can handle 8,832 separate parallel threads. Each core has its own local memory, so the chip carries 900 MB of SRAM in total, with an aggregate bandwidth of 47.5 TB/s. Each GC200 also features 10 IPU links that connect it to other GC200 chips at up to 320 GB/s, as well as a PCIe 4.0 x16 interface.

Graphcore’s Colossus MK2 GC200 chip is made using TSMC’s 7 nm process technology and contains 59.4 billion transistors, more than the 54 billion in Nvidia’s A100 GPU.
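To put the headline specifications in per-core and per-link terms, the short Python sketch below derives a few figures from the numbers quoted above. It is back-of-the-envelope arithmetic only: it assumes decimal units (1 MB = 1,000 KB) and an even split of memory and bandwidth across cores and links, neither of which Graphcore specifies here, so the company's own per-core figures may differ slightly. All variable names are illustrative.

```python
# GC200 and A100 figures as quoted in the article.
CORES = 1_472
THREADS = 8_832
SRAM_MB = 900              # total on-chip SRAM
SRAM_BW_TBPS = 47.5        # aggregate SRAM bandwidth
IPU_LINKS = 10
IPU_LINK_BW_GBPS = 320     # total chip-to-chip bandwidth
GC200_TRANSISTORS = 59.4e9
A100_TRANSISTORS = 54e9

# Derived figures (assumption: decimal units, even split per core and per link).
threads_per_core = THREADS // CORES
sram_kb_per_core = SRAM_MB * 1_000 / CORES
bw_gbps_per_core = SRAM_BW_TBPS * 1_000 / CORES
bw_gbps_per_link = IPU_LINK_BW_GBPS / IPU_LINKS
transistor_lead_pct = (GC200_TRANSISTORS / A100_TRANSISTORS - 1) * 100

print(f"{threads_per_core} hardware threads per core")
print(f"~{sram_kb_per_core:.0f} KB of SRAM per core")
print(f"~{bw_gbps_per_core:.1f} GB/s of SRAM bandwidth per core")
print(f"{bw_gbps_per_link:.0f} GB/s per IPU link")
print(f"{transistor_lead_pct:.0f}% more transistors than the A100")
```

In other words, each core runs six threads against roughly 611 KB of its own SRAM, and the GC200's transistor lead over the A100 works out to about 10%.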

Up to 16 ExaFLOPS

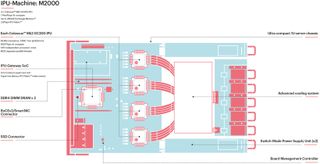

Each Colossus MK2 GC200 IPU can provide 250 TeraFLOPS of AI compute performance at FP16.16 and FP16.SR (stochastic rounding) precision, as well as 62.5 TeraFLOPS of single-precision FP32 performance. The four GC200 chips inside Graphcore’s IPU-M2000 system (which comes with 448 GB of exchange DRAM to handle large workloads) offer up to 1 PetaFLOPS of FP16.16/FP16.SR compute.
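The precision and memory figures above can be related with simple arithmetic. The sketch below computes how much more 16-bit throughput a GC200 offers than FP32 throughput, and how much exchange DRAM each of the four IPUs in an IPU-M2000 would get if the 448 GB were split evenly. The even split is an assumption for illustration, not a statement about how Graphcore allocates that memory, and the names are hypothetical.

```python
# Per-chip and per-system figures as quoted in the article.
GC200_FP16_TFLOPS = 250.0
GC200_FP32_TFLOPS = 62.5
IPUS_PER_M2000 = 4
M2000_EXCHANGE_DRAM_GB = 448

# FP16.16 / FP16.SR peak throughput relative to FP32 peak throughput.
fp16_to_fp32_ratio = GC200_FP16_TFLOPS / GC200_FP32_TFLOPS

# Assumption: exchange DRAM shared evenly between the four IPUs.
dram_gb_per_ipu = M2000_EXCHANGE_DRAM_GB / IPUS_PER_M2000

print(f"FP16 peak is {fp16_to_fp32_ratio:.0f}x FP32 peak")
print(f"~{dram_gb_per_ipu:.0f} GB of exchange DRAM per IPU")
```

The 4:1 ratio is why keeping arithmetic in 16-bit formats, as the quote below describes, matters so much for peak performance.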

“Our Colossus IPUs are unique in having support for Stochastic Rounding on the arithmetic that is supported in hardware and runs at the full speed of the processor,” said Nigel Toon, CEO of Graphcore. “This allows the Colossus Mk2 IPU to keep all arithmetic in 16-bit formats, reducing memory requirements, saving on read and write energy and reducing energy in the arithmetic logic, while delivering full accuracy Machine Intelligence results.”
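The benefit of stochastic rounding is easiest to see when many small updates are added to a 16-bit value. With ordinary round-to-nearest, an update smaller than half the gap between adjacent FP16 numbers is simply lost; stochastic rounding instead rounds up with a probability proportional to how close the exact result is to the upper neighbour, so the rounded value is correct on average. The NumPy sketch below is a software illustration of that general technique, not Graphcore's hardware implementation. The function name `stochastic_round_fp16` is hypothetical, and the code assumes finite inputs within the FP16 range.

```python
import numpy as np

FP16_MAX = float(np.finfo(np.float16).max)  # 65504.0


def stochastic_round_fp16(x, rng):
    """Round float64 values to float16 stochastically (unbiased in expectation)."""
    x = np.atleast_1d(np.asarray(x, dtype=np.float64))
    sign = np.sign(x)
    m = np.abs(x)  # round the magnitude; the sign is reapplied at the end
    if np.any(m > FP16_MAX):
        raise ValueError("input outside the finite FP16 range")

    # Round-to-nearest yields one of the two FP16 neighbours of m.
    t = m.astype(np.float16)
    t64 = t.astype(np.float64)
    bits = t.view(np.uint16).astype(np.int32)

    # For non-negative FP16 values, consecutive bit patterns are consecutive
    # numbers, so the other neighbour is one bit pattern away.
    lower_bits = np.where(t64 > m, bits - 1, bits)
    upper_bits = np.where(t64 > m, bits, bits + 1)
    lower = lower_bits.astype(np.uint16).view(np.float16).astype(np.float64)
    upper = upper_bits.astype(np.uint16).view(np.float16).astype(np.float64)

    # Round up with probability proportional to the distance from `lower`.
    p_up = (m - lower) / (upper - lower)
    rounded = np.where(rng.random(m.shape) < p_up, upper, lower)
    return (sign * rounded).astype(np.float16)


# Add 0.0001 to 1.0 ten thousand times (exact answer: 2.0).
rng = np.random.default_rng(0)
acc_rne = np.float16(1.0)
acc_sr = np.float16(1.0)
for _ in range(10_000):
    acc_rne = np.float16(float(acc_rne) + 1e-4)  # round-to-nearest
    acc_sr = stochastic_round_fp16(float(acc_sr) + 1e-4, rng)[0]

print("round-to-nearest:", acc_rne)   # every update is lost, stays at 1.0
print("stochastic rounding:", acc_sr)  # lands close to 2.0
```

The round-to-nearest accumulator never moves, because 0.0001 is less than half the FP16 spacing near 1.0 (about 0.00098), while the stochastically rounded accumulator tracks the exact sum. This is the effect that lets training stay in 16-bit formats without the small gradient updates vanishing.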

Customers who need more performance can also order IPU-POD64 systems, which combine 16 IPU-M2000 machines (64 IPUs) for 16 PetaFLOPS, while large organizations can scale out to 64,000 IPUs for 16 ExaFLOPS at FP16.16/FP16.SR.
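The scaling figures follow directly from the 250 TeraFLOPS per-chip number. The sketch below reproduces them; note that it computes aggregate peak throughput and therefore assumes perfectly linear scaling, which real workloads will not achieve once interconnect and software overheads come into play. The constant and function names are hypothetical.

```python
GC200_FP16_TFLOPS = 250   # peak FP16.16 / FP16.SR per chip
IPUS_PER_M2000 = 4
M2000_PER_POD64 = 16
MAX_IPUS = 64_000


def peak_fp16_pflops(num_ipus: int) -> float:
    """Aggregate peak FP16 PetaFLOPS, assuming perfectly linear scaling."""
    return num_ipus * GC200_FP16_TFLOPS / 1_000


pod64_ipus = M2000_PER_POD64 * IPUS_PER_M2000

print(f"IPU-M2000 ({IPUS_PER_M2000} IPUs): {peak_fp16_pflops(IPUS_PER_M2000):.0f} PFLOPS")
print(f"IPU-POD64 ({pod64_ipus} IPUs): {peak_fp16_pflops(pod64_ipus):.0f} PFLOPS")
print(f"{MAX_IPUS:,} IPUs: {peak_fp16_pflops(MAX_IPUS) / 1_000:.0f} EFLOPS")
```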

Since IPUs cannot run operating systems, Graphcore’s IPU-M2000 machines have to be connected to one or more regular CPU-based host servers. On the other hand, this means users are not confined to a fixed ratio of CPU to machine intelligence compute at the server level and can adjust their hardware mix.

To take advantage of Graphcore’s Colossus IPUs, developers need to use the company’s proprietary Poplar software stack, which is built specifically for its IPU architecture. Graphcore says it has put a lot of effort into optimizing the stack to extract maximum performance from its hardware and to make the whole solution easy to use.

Available in Q4

Graphcore’s IPU-M2000 and IPU-POD64 systems are available to pre-order now, with production volume shipments commencing in the fourth quarter. Interested parties can also access and evaluate IPU-POD systems in the cloud through Cirrascale.

Select customers are already evaluating Graphcore’s Colossus MK2 platform.

“J.P. Morgan is evaluating Graphcore’s technology to see if our solutions can accelerate their advances in AI, specifically, in the NLP and speech recognition arenas,” a statement by Graphcore reads.

Via The Verge

Anton Shilov is the News Editor at AnandTech, Inc. For more than four years, he has been writing for magazines and websites such as AnandTech, TechRadar, Tom's Guide, Kit Guru, EE Times, Tech & Learning, EE Times Asia, and Design & Reuse.